BING-JHEN HONG

In my last article on Nvidia Corporation (NASDAQ:NVDA), I noted that the company was set to ride a 30% growth reversion angle. Since then, NVDA has rallied 222% on the back of significant earnings growth, which has sharpened the reversion angle considerably. I believe that Nvidia will remain the leader in the rapidly developing AI accelerator market, as GPU-powered solutions are crucial for both model training and inference.

I am still bullish on NVDA, and in my view, its share price (“SP”) could appreciate by up to a further 15%, especially with the launch of the new-generation Blackwell architecture. In this piece, I will go through the growth potential in the data center and gaming segments and try to show that Nvidia is more than reasonably valued based on peer comparisons, historical levels, and earnings growth.

Growth prospects in this valuation

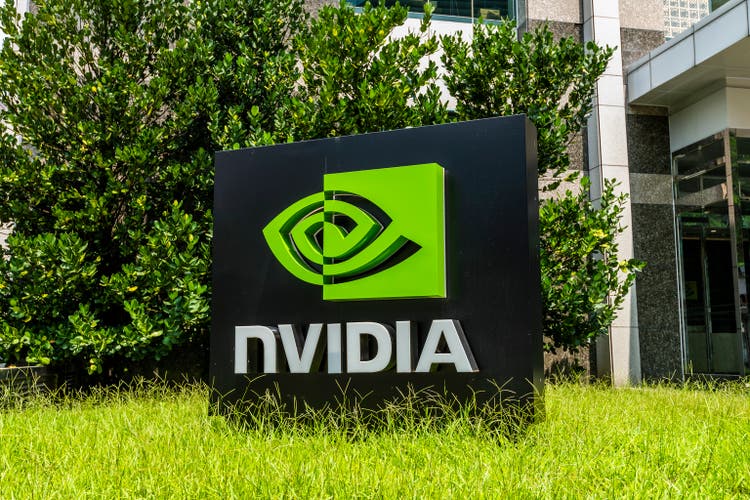

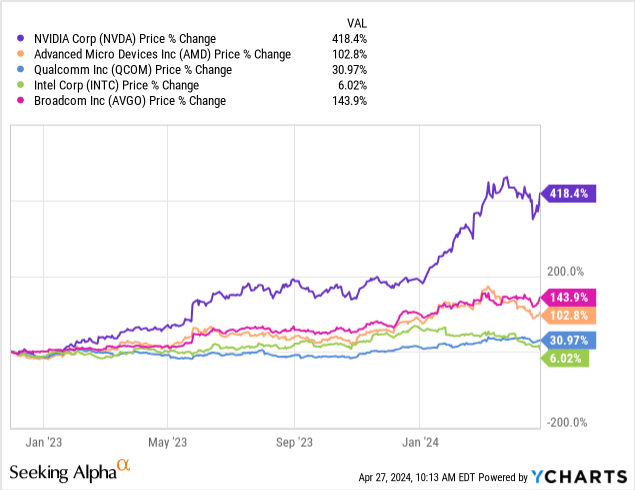

Nvidia has become the main beneficiary of the hype around artificial intelligence, with the stock appreciating 418% since the launch of ChatGPT. Amid the rapid development of AI technology, which requires a whole new level of high-performance computing (HPC) for model training and inference, the company saw a significant uptick in demand for GPU-based solutions.

Alongside record financials, NVDA reached record valuations, which those calling it a bubble considered unsustainable. However, the stock recently suffered a setback amid several concerns, including the postponement of the Fed’s interest rate cuts, a disappointing order book from ASML Holding (ASML), and the lack of any indication from Super Micro Computer (SMCI) about its March-quarter financials.

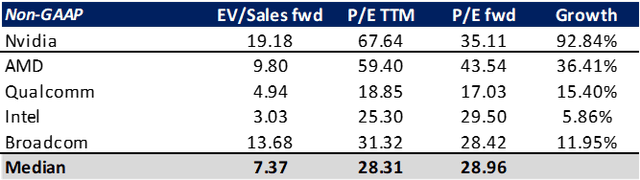

Looking at valuation, Nvidia still trades at noticeably higher EV/Sales and P/E ratios than its peers. However, in NVDA’s case, a direct comparison is not quite reasonable without considering its growth prospects.

Ratio analysis (Seeking Alpha)

The company is the clear leader in the GPU accelerator segment of the data center market, holding anywhere from 80% to 97% of it, according to some reports. To get a sense of the market’s size, recall AMD’s projection of a $45 billion total addressable market (TAM) for AI chips in 2023. Measured against total data center systems spending for 2023, that represents up to 19% of the year’s spending. Moreover, AMD forecast a $400 billion TAM in 2027, citing a substantial increase in AI chip demand.

Based on these numbers, a rough calculation points to around $40 billion in AI chip revenue for Nvidia in 2023. If we suppose that the company retains at least 80% market share, that suggests up to $320 billion in revenue by 2027, or a 68% CAGR. Quite a bull case, isn’t it? Meanwhile, BofA analysts estimated a TAM of about $180 billion, which, at the same 80% share, implies a 38% CAGR.
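The CAGR figures above can be reproduced with a few lines of Python. This is a minimal sketch that assumes a four-year horizon (2023 to 2027) and applies the same 80% market share to both AMD’s and BofA’s TAM estimates; the variable names are illustrative only.

```python
# Sketch of the CAGR arithmetic above. Assumptions: a 4-year horizon
# (2023 -> 2027) and an 80% Nvidia share applied to both TAM estimates.

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1

NVDA_2023_AI_REVENUE_BN = 40.0  # rough 2023 estimate from the text
MARKET_SHARE = 0.80             # share assumed to be retained through 2027

scenarios = {
    "AMD TAM ($400bn)": 400.0,
    "BofA TAM ($180bn)": 180.0,
}

for name, tam_2027_bn in scenarios.items():
    revenue_2027_bn = tam_2027_bn * MARKET_SHARE
    growth = cagr(NVDA_2023_AI_REVENUE_BN, revenue_2027_bn, years=4)
    print(f"{name}: ${revenue_2027_bn:.0f}bn in 2027, CAGR {growth:.1%}")
```

Run as-is, this prints a CAGR of 68.2% for the AMD scenario and 37.7% for the BofA scenario, in line with the rounded 68% and 38% quoted above.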

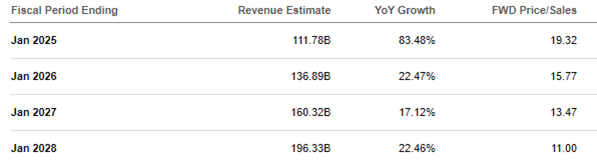

Analysts’ growth expectations (Seeking Alpha)

The latter growth rate seems reasonable to me, and it is broadly in line with analysts’ growth expectations. Meanwhile, the competition is also evolving. Advanced Micro Devices, Inc. (AMD) recently started shipping its new Instinct MI300X GPU, which could challenge Nvidia’s H100 in memory-intensive tasks like large-scene rendering and simulation, while the H100 has been in production for a year and excels in AI-enhanced workflows and ray-traced rendering performance. Intel (INTC), in turn, is expected to launch Gaudi 3 later this year, claiming 50% faster performance than the H100 on certain language models. However, Nvidia remains in a strong market position, as the H200 chip should hit the market any day now, while the next-generation Blackwell architecture is set to launch later in 2024.

Another issue worth discussing is the recent supply constraint on the Hopper architecture, which remains in strong demand, according to management. Although the situation is reportedly improving, the shortage has become a major bottleneck for technology companies and AI researchers, who have to compete for AI chips. As a result, some hyperscalers have started pursuing in-house AI-accelerator solutions. In particular, Alphabet (GOOGL) is known for its own Cloud TPU (tensor processing unit), Amazon (AMZN) produces Trainium chips, International Business Machines (IBM) has its AIU (artificial intelligence unit), Meta Platforms (META) uses MTIA (Meta Training and Inference Accelerator) internally, and Microsoft Corp. (MSFT) launched its Maia AI accelerator last year.

Large cloud providers accounted for more than half of Nvidia’s data center revenue, but in my view, fears that they could start replacing Nvidia’s products with their in-house solutions are overblown. This is especially true given the number of serverless GPU providers that can offer distinct top-tier multi-GPU configurations. And with a new round of GPU offerings expected to hit the market soon, I believe that hyperscalers will keep opting for Nvidia GPUs to retain their dominance in the cloud market.

Management’s comment that inference now accounts for more than 40% of the company’s business has understandably raised concerns about that side of the business, given the price tag of Nvidia’s data center GPUs (Hopper is estimated to cost between $25,000 and $40,000) and the estimated performance of alternative GPUs. However, model training is a continuous process that must be repeated as models grow more sophisticated, receive upgrades, and have bugs eliminated. So, the more data inference generates, the more data the models must process, and the more powerful the GPU solutions required.

Another strong growth factor for Nvidia is its leadership in the gaming market. The company reported solid consumer demand for gaming hardware during the holiday season, when I also became an RTX 40 series user, retiring my old GTX card. With its new DLSS technology, Nvidia could dominate the market further, since even budget gamers can now opt for the RTX 4050 and enjoy Cyberpunk 2077 at ultra settings with ray tracing enabled. At the same time, demand for higher-end 40 series cards could be boosted by the rapid proliferation of VR devices and high-resolution monitors. The entry point here could be the recently released RTX 4070 SUPER, which, in my view, is the best overall GPU right now. Moreover, the market for AIB (add-in board) discrete GPUs is improving following the COVID-era device refresh cycle. The latest surveys revealed that graphics card shipments rose 32% YoY in Q4’23 to 9.5 million units, marking the third consecutive quarter of growth.

Reversion angle

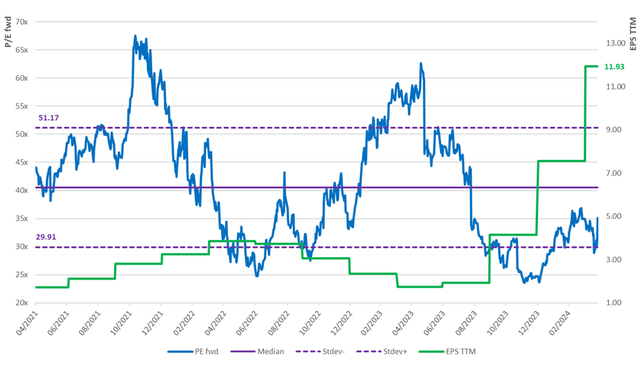

Since my last article, NVDA’s valuation has normalized significantly. The forward P/E ratio has declined from 59.2x to 35.1x, a level that consensus had previously expected only in fiscal year 2026 (ending January 2026). Last time, I noted that a 30% growth reversion angle could bring the multiple down to the low 20s by FY2028. From today’s vantage point, however, this could happen much earlier, as the reversion angle has sharpened significantly.
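To illustrate why the reversion can arrive sooner, the sketch below holds the share price flat and compounds EPS at 30% a year from the current 35.1x forward P/E. The flat price and constant growth rate are simplifying assumptions for illustration, not the author’s forecast.

```python
# Illustrative sketch: forward P/E compression when the share price stays
# flat and EPS compounds at a constant rate (both simplifying assumptions).

def forward_pe_path(current_pe: float, eps_growth: float, years: int) -> list[float]:
    """P/E for year 0..years, with price unchanged and EPS growing at eps_growth."""
    return [current_pe / (1 + eps_growth) ** n for n in range(years + 1)]

path = forward_pe_path(current_pe=35.1, eps_growth=0.30, years=3)
for year, pe in enumerate(path):
    print(f"year {year}: {pe:.1f}x")
```

Under these assumptions, the multiple falls to about 27.0x after one year and about 20.8x after two, reaching the low 20s within roughly two years of compounding.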

Valuation of NVDA; P/E left; EPS right (prepared by author)

The chart above clearly shows this pattern: the green line (earnings per share) has climbed significantly on the back of accelerating data center growth. This has pushed the company’s P/E ratio down substantially, to below the violet line, which marks the three-year median P/E of 40.5x. The two dotted lines represent one standard deviation above and below the median, and NVDA’s valuation has hovered around the lower line over the last six months. There is also considerable room for multiple expansion toward the median line, which implies 15.5% upside to a $1,013 share price.
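The multiple-expansion upside is the ratio of the target multiple to the current one, assuming forward EPS stays unchanged so that the price moves in step with the P/E. A minimal sketch using the 35.1x forward P/E and the 40.5x three-year median:

```python
# Sketch: price upside if the multiple re-rates to a target P/E,
# assuming forward EPS is unchanged (price moves one-for-one with P/E).

def rerating_upside(current_pe: float, target_pe: float) -> float:
    """Fractional price change if the P/E moves from current_pe to target_pe."""
    return target_pe / current_pe - 1

upside = rerating_upside(current_pe=35.1, target_pe=40.5)
print(f"upside to 3Y median P/E: {upside:.1%}")
```

This yields about 15.4%; the small gap from the 15.5% quoted above presumably reflects rounding in the published multiples.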

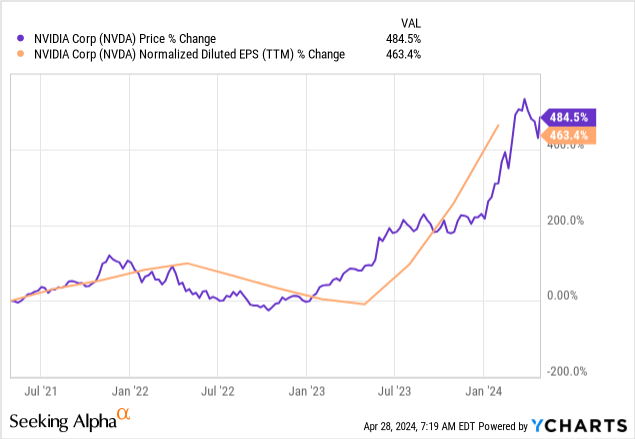

Before moving on to the peer comparison, I would like to address doubts that NVDA has climbed to unsustainable levels.

Over the last three years, NVDA’s SP has appreciated by 485%, particularly since the launch of ChatGPT. However, earnings have kept pace, rising 463% over the same period, which justifies the company’s valuation from an EPS growth perspective.
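When price and earnings grow over the same window, the P/E changes by the ratio of their growth factors. A small sketch applying that identity to the 485% and 463% figures, assuming both are measured over the same three-year period on a consistent EPS basis (the text does not specify trailing or forward EPS):

```python
# Sketch: change in P/E implied by price growth and EPS growth over the
# same period, since P/E = price / EPS.

def pe_change(price_growth: float, eps_growth: float) -> float:
    """Fractional P/E change given fractional price and EPS growth."""
    return (1 + price_growth) / (1 + eps_growth) - 1

change = pe_change(price_growth=4.85, eps_growth=4.63)
print(f"P/E change over three years: {change:+.1%}")
```

The result is a P/E only about 3.9% higher than three years earlier, which is the arithmetic behind the argument that the rally has largely been matched by earnings.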

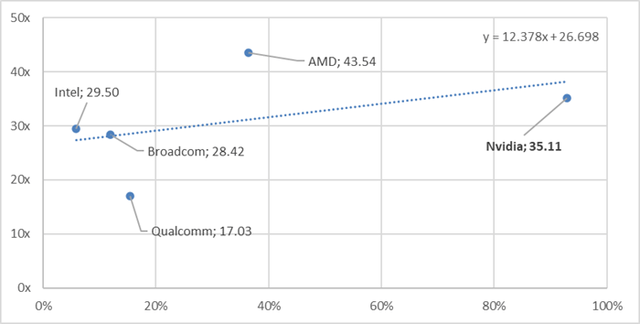

Now, let’s see how Nvidia ranks against AMD, Intel, Broadcom (AVGO), and Qualcomm (QCOM) once future growth expectations are factored in.

EPS growth and P/E scatter (prepared by author)

Nvidia sits clearly below the trend line, suggesting that its 90%+ earnings growth estimate more than justifies its forward P/E of 35.1x. Moreover, for the company’s valuation to align with the trend line, NVDA’s share price would need to appreciate to at least $954, or by 8.8%.

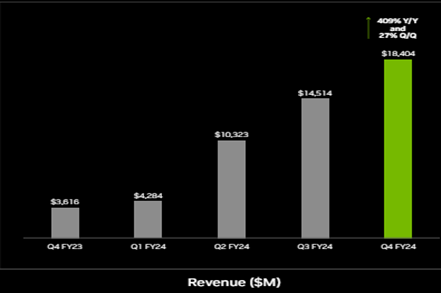

Finally, I would like to discuss the data center revenue chart so that we do not fall into an insidious growth trap. Nvidia reported 5x YoY growth in compute revenue and 3x YoY growth in networking sales, for an overall 409% YoY surge in data center LOB (line of business) revenue.

Data center revenue (company presentation)

Previously, we mentioned that the segment has the potential for up to a 38% CAGR over the medium term. Investors may be disappointed by such comparatively low numbers, but the recent growth shown in the chart reflects the low base from a year ago, and my point is that market participants should be satisfied even with sequential quarterly growth of 10% or less in data center revenue. Growth will inevitably slow to such rates at some point, given last year’s acceleration. And because Nvidia holds the leading share of the AI accelerator market, we can readily gauge the data center cycle: such advanced and expensive GPUs usually do not go through distribution channels but are sold directly to customers. This means end demand translates one-for-one into sales, bypassing channel inventory, and trends are less volatile than in gaming, for example. As a result, I do not think a significant decline in sales is likely, and concerns would be warranted only after two or three consecutive quarters of sequential decline in data center sales.
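To put the 10% sequential benchmark in annual terms, the sketch below compounds a constant quarter-over-quarter rate over four quarters. A constant rate is a simplifying assumption; real quarters will vary.

```python
# Sketch: annualizing a constant sequential (quarter-over-quarter) growth
# rate by compounding it over four quarters.

def annualize_quarterly(qoq_growth: float) -> float:
    """Year-over-year growth implied by four quarters of constant QoQ growth."""
    return (1 + qoq_growth) ** 4 - 1

for qoq in (0.05, 0.10):
    print(f"{qoq:.0%} per quarter -> {annualize_quarterly(qoq):.1%} per year")
```

At 10% per quarter, this works out to about 46.4% a year, still above the roughly 38% medium-term CAGR discussed earlier, while 5% per quarter compounds to about 21.6%.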

Risk factors

Tight monetary policy continues to put pressure on technology budgets. Nvidia currently leads the data center AI chip market, but potential supply chain issues could drive stronger adoption of rivals’ products. There is also a risk of further tightening of U.S. export restrictions on China, which remains a significant market for Nvidia. In addition, the stock has risen strongly over the past year on optimistic forecasts and positive trends in the AI market. If these trends are undermined by a further deterioration in the global economy, investors may find themselves disappointed.

Investment conclusion

I am keeping my bullish call on Nvidia, since the company delivered much stronger earnings than my previous piece anticipated. NVDA trades at a more than reasonable valuation, as evidenced by peer comparisons and EPS growth, offering up to 15% additional upside from current levels.

I believe that Nvidia will retain its leading position in AI-accelerator solutions, overcoming the concerns mentioned above. For now, competitors may offer alternatives to the Hopper architecture, but Nvidia has the potential to skim the cream off the data center GPU market with its upcoming next-generation Blackwell chips. With this in mind, I believe the company could sustain data center revenue growth closer to the mid-double digits, especially once hyperscalers and other cloud GPU providers start deploying leading-edge AI hardware to handle more demanding models.